by DotNetNerd

13. July 2016 07:19

One of the nice features of functional programming languages like F# is the lack of null. Not having to check for null everywhere makes code a lot less error-prone. As the saying goes: "What can C# do that F# cannot? NullReferenceException." Tony Hoare, who introduced null references in ALGOL, even calls them his billion-dollar mistake. The thing is that although this is a well-known problem, it is not trivial to introduce non-nullable types into an existing language, as Anders Hejlsberg discussed when I interviewed him at GOTO back in 2012.

With version 2.0 of TypeScript we finally get non-nullable types, implemented behind the compiler switch `--strictNullChecks`. More...
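To make the effect of the switch concrete, here is a minimal sketch, assuming TypeScript 2.0 or later compiled with `--strictNullChecks`. The functions `findUser`, `greet` and `describeUser` are hypothetical helpers for illustration only; the point is that a value which may be missing has to say so in its type, and the compiler forces a null check before it can be used as a plain `string`.

```typescript
// Compile with: tsc --strictNullChecks nulls.ts
// findUser, greet and describeUser are hypothetical helpers for illustration.

// Under --strictNullChecks, `string` no longer silently includes null or
// undefined, so "might be missing" must be spelled out as a union type.
function findUser(id: number): string | null {
  return id === 1 ? "Alice" : null;
}

function greet(name: string): string {
  return "Hello, " + name;
}

function describeUser(id: number): string {
  const user = findUser(id);
  // greet(user); // rejected by the compiler: 'string | null' is not assignable to 'string'

  // Checking for null narrows `user` from `string | null` to `string`.
  if (user === null) {
    return "No user found";
  }
  return greet(user);
}

console.log(describeUser(1)); // Hello, Alice
console.log(describeUser(2)); // No user found
```

Without the switch the commented-out call compiles fine and the null only surfaces at runtime, which is exactly the class of bug the flag is meant to catch.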

by DotNetNerd

26. May 2016 11:27

One of the really nice things about Azure Web Apps is the support for running WebJobs. Most large web applications will at some point need data or media processed in the background, and for that WebJobs are a perfect fit.

More...

by dotnetnerd

11. May 2016 12:49

A few weeks ago Microsoft introduced the concept of "Cool" Blob Storage on Azure, which means that you get REALLY cheap storage for data that you don't access very often, backup being an obvious use case. I have used Dropbox for backups for a while, and although it works fine up to a certain amount of data, it is not really a good fit for backing up the family photos and videos from the home NAS once a year.

More...

by DotNetNerd

29. March 2016 12:59

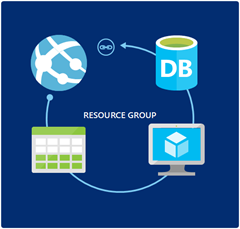

A core value that Azure brings to modern projects is enabling developers to take control of the deployment process and make it fast and painless. Sure, scalability is nice when and if you need it, but the speed and flexibility in setting up an entire environment for your application is always valuable, so for me this is the more important feature of Azure. Gone are the days of waiting days at best, more likely weeks, and maybe even months for the IT department to create a new development or test environment.

More...

by dotnetnerd

11. February 2016 14:08

Visual Studio Online was recently renamed Visual Studio Team Services, which more accurately tells you what it is about. Sure, you can still browse and edit code, but that is just one feature, and not really a core one at that. On my current project I have had the chance to dive in a little deeper and look at some of the features VSTS has to offer. Although I have often been critical of these kinds of products, VSTS has been a mostly pleasant acquaintance.

More...

by DotNetNerd

21. December 2015 14:11

So it's that time again. Another year has passed, and like so many others I want to take a little time to look back at how I have spent my nerdy hours. Most importantly, it has been my second year as an independent consultant, and I am still enjoying the freedom and the chance to work on very different projects. More...

by DotNetNerd

11. December 2015 11:17

Cross-platform support (xplat) has been the big topic around ASP.NET 5, but to be honest it does not matter that much to many of us. Sure, it is always nice to have options, but if you work in a Microsoft shop or at an enterprise that sees itself as based on Microsoft technologies, that is not likely to change, and why should it? The good news is that taking the framework and tooling apart to enable these options also brings a more aligned and flexible architecture, which solves some of the issues we have today with, e.g., build setups. With that said, xplat is not all that ASP.NET 5 is about.

More...

by DotNetNerd

8. November 2015 14:12

With ASP.NET 5, which is currently in Beta 8, Microsoft has launched a new web server named Kestrel, which is of course open source. There are a number of reasons for building Kestrel, but the most important is to provide a cross-platform web server that does not rely on System.Web and a full version of the CLR to bootstrap the new execution environment (DNX) and CoreCLR, which was not possible with Helios.

More...

by dotnetnerd

7. October 2015 08:10

After lunch I picked a talk by Dave Thomas on "Fast data: tools and peopleware". Dave threw some punches at Scrum and OO languages while describing the challenges of handling the huge amounts of data and the variety of devices we have today. From that he concluded that the amount of serialization and modelling we are doing is hopeless, and that going through layers like ODBC is terrible. More...

by dotnetnerd

7. October 2015 08:03

The day started with a keynote by Brian Goetz called "Move deliberately and don't break anything", as a kind of answer to Eric's talk and a counterpoint to Mark Zuckerberg's "move fast and break things". He quoted Bob Dylan, "When you ain't got nothing, you got nothing to lose", making the argument that moving fast and breaking things makes sense for startups, which have everything to gain and not much to lose, but for a mature company with a large user base it is a different story. He described programming as an economic exercise, where programmer time and pizza are turned into software and technical debt. Building on this he argued for pragmatism and that there is no good or bad, this time quoting Yoda: "There is no good, there is only good for."

More...